Better AWS CloudWatch Exporter: Ingestion Architecture Overview

How to leverage AWS CloudWatch Metric Streams to build a Prometheus exporter for AWS metrics: overall ingestion architecture, pros and cons versus using the CloudWatch API.

Cross-Post: LinkedIn

In my previous post I discussed what I found was lacking in the current tool ecosystem to be able to successfully monitor AWS services.

This will be the first of several posts where I will start exploring and explaining different facets of a tool I'm developing to help fill in those gaps. In this one, I will be focusing on the ingestion mechanism.

The Pain Points

As we've seen, the two main tools that exist today rely on the AWS CloudWatch API to retrieve metrics. Because they poll, both require the user to configure a refresh interval as well as the lookback period to use in each retrieval. This leads to several suboptimal behaviors. A short refresh interval can cause API throttling, or the full set of metrics may not be retrievable within that interval. A long interval reduces the freshness of the data and delays any alerting or reaction based on it.

Also, because AWS doesn't expose newly gathered metrics immediately through the CloudWatch API, a poll may not be able to retrieve values for the most recent periods, which leads to gaps in the metric time series.

Managing all those knobs is painful, and balancing the configuration so that metrics are retrieved consistently, correctly and with the least possible lag is not easy. It also needs frequent re-evaluation as the size of the infrastructure changes and as CloudWatch behavior changes.
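To make the interaction between those knobs concrete, here is a minimal simulation of the gap problem. It is only a sketch under stated assumptions: the function name, the fixed publish delay and the 60-second period are illustrative and not taken from any existing exporter. It assumes a datapoint becomes visible through the API a fixed delay after its period ends, and that each poll only asks for the lookback window.

```python
def missed_periods(refresh_interval, lookback, publish_delay, period=60, horizon=3600):
    """Return the start times (in seconds) of metric periods no poll ever retrieves.

    Assumption: the datapoint for the period starting at `start` becomes
    visible at `start + period + publish_delay`. All values are in seconds.
    """
    retrieved = set()
    poll_times = range(refresh_interval, horizon + 1, refresh_interval)
    for poll_time in poll_times:
        window_start = poll_time - lookback
        for start in range(0, horizon, period):
            in_window = window_start <= start < poll_time
            available = start + period + publish_delay <= poll_time
            if in_window and available:
                retrieved.add(start)

    # Only judge periods that were visible by the time of the last poll.
    last_poll = poll_times[-1]
    expected = {
        start
        for start in range(0, horizon, period)
        if start + period + publish_delay <= last_poll
    }
    return sorted(expected - retrieved)


# Lookback equal to the refresh interval: late datapoints fall out of the window.
print(missed_periods(refresh_interval=300, lookback=300, publish_delay=120, horizon=900))
# A longer lookback closes the gaps, at the cost of re-fetching data on every poll.
print(missed_periods(refresh_interval=300, lookback=600, publish_delay=120, horizon=900))
```

Under these assumptions, the first configuration never sees the last two periods before each poll, while doubling the lookback recovers them by fetching every period twice.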

Improved Ingestion Architecture: Leveraging CloudWatch Metric Streams

Fortunately, AWS provides a push mechanism to get AWS CloudWatch metrics as they are generated: CloudWatch Metric Streams. With a metric stream we can have AWS deliver the metrics as they are generated to a Firehose delivery stream, which in turn delivers them to an HTTP endpoint for processing.

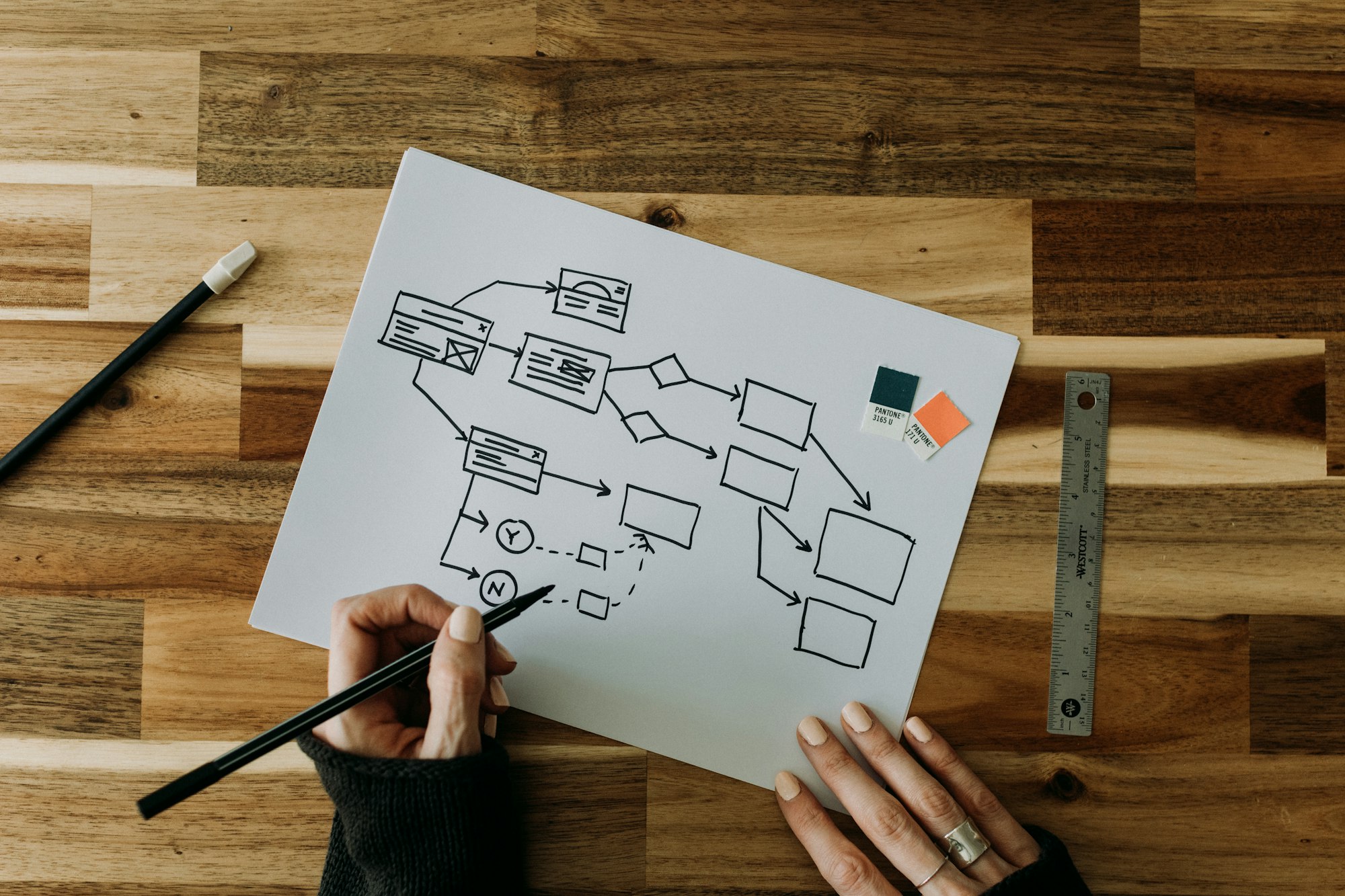

The ingestion architecture for the exporter looks like this:

The CloudWatch Stream acts as the generator of metrics while the AWS Firehose acts as the batching and dispatching mechanism to the New CloudWatch Exporter's HTTP endpoint.
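As a rough illustration of the receiving side, the sketch below decodes one Firehose HTTP delivery request and builds the acknowledgement Firehose expects. It assumes the documented HTTP endpoint delivery format: a JSON body with `requestId`, `timestamp` and a list of `records` whose `data` field is base64-encoded, answered by a JSON body that echoes `requestId`. The function name and return shape are hypothetical, and this is not necessarily how the exporter itself is implemented.

```python
import base64
import json
import time


def handle_firehose_request(body: bytes) -> tuple[int, dict, list[bytes]]:
    """Decode a Firehose delivery request.

    Returns (http_status, response_body, decoded_records). The response body
    must be sent back as JSON with Content-Type application/json.
    """
    now_ms = int(time.time() * 1000)
    try:
        payload = json.loads(body)
        request_id = payload["requestId"]
        # Each record carries one base64-encoded chunk of metric data.
        records = [base64.b64decode(record["data"]) for record in payload["records"]]
    except (ValueError, KeyError, TypeError) as exc:
        # Malformed request: report the error so Firehose can retry or back up to S3.
        # Simplification: the request id is left empty here; a real handler could
        # fall back to the X-Amz-Firehose-Request-Id header.
        error = {"requestId": "", "timestamp": now_ms, "errorMessage": str(exc)}
        return 400, error, []
    return 200, {"requestId": request_id, "timestamp": now_ms}, records
```

A real endpoint would also validate the access key that Firehose sends in the `X-Amz-Firehose-Access-Key` header before accepting the batch.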

Pros and Cons of Using CloudWatch Streams

CloudWatch Streams were introduced in 2021[1] and they are the preferred mechanism to continuously retrieve metrics generated by AWS CloudWatch. They provide near real-time delivery with very low latency, especially compared to the polling mechanism.

By leveraging CloudWatch Streams the exporter gets several advantages over the traditional polling mechanisms:

- Low latency between a metric update and the moment it is processed and made available for Prometheus to scrape. Firehose batching introduces some lag, but it can be capped at 60 seconds and, depending on the volume, it can actually be less than that.

- No unnecessary polls for stale or non-existent metrics. The polling mechanism is forced to attempt to retrieve metrics that might not have changed or might not have been generated at all. Not all CloudWatch metrics are generated by default; some are only generated when certain events happen. For these metrics the Stream will not issue updates unless there is new data.

- Generated metrics are configured at the source. In the existing solutions one needs to configure the exporter with the metrics one wants to export and some metric discovery criteria. The CloudWatch Stream now holds that configuration, and as new metrics that match it are generated, they are forwarded to the exporter through Firehose.

- It removes the risk of hitting the CloudWatch API throttling limit. It is quite common for large infrastructure footprints to hit this limit when using the existing exporters, which then requires fine-tuning the concurrency and the update interval to stay within it.

- It standardizes the ingestion format for the new exporter. The Firehose HTTP request format is fairly standard, but the important piece is that the CloudWatch metrics are exported in the OpenTelemetry 0.7.0 format.
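To give an idea of what consuming that format involves, here is a minimal sketch of the first decoding step. It assumes, based on my reading of the AWS documentation, that each decoded Firehose record contains one or more protobuf messages, each prefixed by an unsigned varint giving its length in bytes. The function names are hypothetical, and decoding each frame into actual OpenTelemetry metrics would still require the generated protobuf classes, which are not shown here.

```python
def read_varint(buf: bytes, pos: int) -> tuple[int, int]:
    """Read an unsigned 32-bit varint at `pos`; return (value, next_position)."""
    result = 0
    shift = 0
    while True:
        if pos >= len(buf):
            raise ValueError("truncated varint")
        byte = buf[pos]
        pos += 1
        result |= (byte & 0x7F) << shift
        if not byte & 0x80:  # continuation bit is clear: last byte of the varint
            return result, pos
        shift += 7
        if shift >= 35:  # a 32-bit varint uses at most 5 bytes
            raise ValueError("varint too long")


def split_frames(buf: bytes) -> list[bytes]:
    """Split a size-delimited buffer into its individual message payloads."""
    frames = []
    pos = 0
    while pos < len(buf):
        length, pos = read_varint(buf, pos)
        end = pos + length
        if end > len(buf):
            raise ValueError("truncated frame")
        frames.append(buf[pos:end])
        pos = end
    return frames
```

Each returned frame would then be handed to the protobuf parser for the metrics export request message.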

The downside of using CloudWatch Streams is that it requires more setup work:

- Hosting the New CloudWatch Exporter where Firehose can reach it and enabling HTTPS for the endpoint.

- Compared to the current exporters, which can run anywhere with access to the AWS CloudWatch API, this setup requires several pieces of infrastructure with the appropriate AWS IAM policies: a CloudWatch Stream, a Firehose and an S3 bucket.
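As an example of what configuring metrics at the source looks like, the sketch below builds the parameters for the CloudWatch `put_metric_stream` API call as exposed by boto3. The helper name, the stream name and the ARNs are placeholders, and the Firehose delivery stream, IAM role and S3 bucket are assumed to exist already; treat it as an outline rather than a complete setup.

```python
def metric_stream_params(name, firehose_arn, role_arn, namespaces):
    """Build keyword arguments for boto3's CloudWatch put_metric_stream call."""
    return {
        "Name": name,
        "FirehoseArn": firehose_arn,
        "RoleArn": role_arn,
        # Matches the OpenTelemetry 0.7.0 output format discussed above.
        "OutputFormat": "opentelemetry0.7",
        # Only metrics in these namespaces are streamed to Firehose.
        "IncludeFilters": [{"Namespace": namespace} for namespace in namespaces],
    }


params = metric_stream_params(
    name="exporter-stream",  # placeholder name
    firehose_arn="arn:aws:firehose:us-east-1:123456789012:deliverystream/example",  # placeholder
    role_arn="arn:aws:iam::123456789012:role/example-metric-stream",  # placeholder
    namespaces=["AWS/EC2", "AWS/RDS"],
)

# With boto3 installed and AWS credentials configured, the stream could then
# be created with:
#   import boto3
#   boto3.client("cloudwatch").put_metric_stream(**params)
```

In practice this kind of setup is usually expressed in an infrastructure-as-code tool, but the parameters involved are the same.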

Overall, if you and your business rely on AWS infrastructure, the value that can be extracted from the new ingestion architecture outweighs the cost of having more pieces to set up.

As always, stay tuned for more updates on this project as I dig into the internals of the improvements that can be made in the metric conversion per se.

Please leave any comments down below and if you are using CloudWatch metrics (and Prometheus): which set of CloudWatch metrics would you be most interested in seeing properly translated? Also, feel free to reach out through PM.
