Understanding the Operational Costs of Rusty CloudWatch Exporter

An analysis of Rusty CloudWatch Exporter's operational expenses, emphasizing how its architecture influences the costs associated with fetching metrics from AWS, both in nominal terms and in comparison with alternative mechanisms.

Cross-Post: LinkedIn

Understanding the impact of deploying monitoring tools on operational costs is essential for making informed decisions in cloud infrastructure management. In this blog post, we delve into the design of Rusty CloudWatch Exporter (full disclosure: my personal project) and explore how it influences operational costs. By arming potential users with this knowledge, we aim to make it easier to judge whether this tool fits their budgetary constraints and operational requirements.

Analyzing the infrastructure used to host the exporter is outside the scope of this post: there are so many variations, with completely different cost profiles, that covering them wouldn't be feasible. Instead, we are going to focus on the costs of using the streaming architecture to get the metrics out of AWS CloudWatch and into the exporter. Let's dive in...

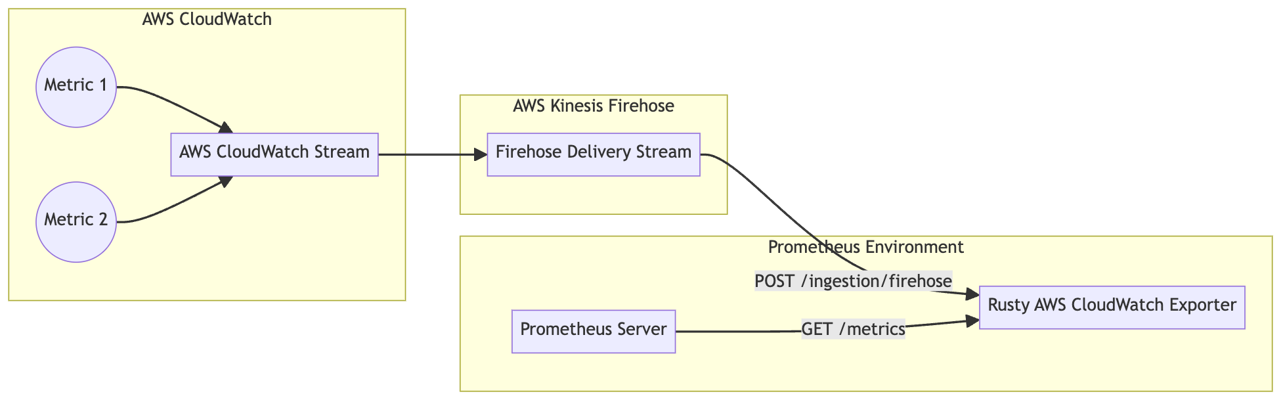

The starting point for the analysis is an overview of how Rusty CloudWatch Exporter fits into the AWS ecosystem to do the metric translation:

As the exporter uses the CloudWatch Metric Stream mechanism along with an HTTP Endpoint, the operational costs will primarily come from three items: Metric Stream costs, Firehose Ingestion costs and Firehose Outbound Data Transfer costs.

CloudWatch Metric Streams pricing[1] is really straightforward: there's a fixed charge per metric update, which in most regions is $0.003 per 1,000 metric updates.

A metric update contains enough data to calculate sum, min, max, average and sample count. If other statistics, such as percentiles, are added to a metric, each additional 5 statistics count as an extra metric update.
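As a rough illustration of the rule above, the number of billed updates per datapoint can be sketched as follows. The function name is hypothetical, and rounding a partial group of extra statistics up to a full update is my assumption about how the pricing rule applies.

```python
import math


def billed_updates(extra_statistics: int) -> int:
    """Billed metric updates for one datapoint of one metric.

    The base update covers sum, min, max, average and sample count.
    Assumption: every started group of 5 extra statistics
    (for example percentiles) is billed as one more update.
    """
    if extra_statistics < 0:
        raise ValueError("extra_statistics must be non-negative")
    return 1 + math.ceil(extra_statistics / 5)


print(billed_updates(0))  # base statistics only
print(billed_updates(3))  # e.g. p50, p90 and p99 added
```

Under that assumption, adding a handful of percentiles to a metric doubles what it costs to stream it.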

How often a metric gets an update depends on the metric itself and, sometimes, on how the service emitting it is configured. This information can usually be found on each service's metrics documentation page. For example, only 14 DynamoDB metrics are updated every minute, while most are updated at 5-minute intervals[4]. To complicate things further, some metrics only trigger an update when a specific condition occurs: the Application Load Balancer's HTTPCode_Target_5XX_Count only emits an update if a target generated a 5XX response in the 60-second period.

The other two charges are based on the amount of data that the Firehose delivery stream has to handle, which is directly proportional to the number of metric updates being shipped.

The size of each update varies with the number and length of tags and other elements within the structure but, based on my own and others' observations, 1 KB per update is a fair estimate.

The Firehose Ingestion costs are straightforward: there is a flat fee per GB ingested that depends on the region[2]; for Oregon it is $0.029/GB. Using the estimate above, 1M metric updates amount to roughly 1 GB, so the cost is going to be around $0.029 per 1M metric updates.

The Firehose Outbound Data Transfer costs depend on where Rusty CloudWatch Exporter is deployed and how Firehose reaches it. If the exporter runs outside of AWS, outbound internet traffic costs are incurred. If it runs in a different region from Firehose, inter-region costs apply, whereas intra-region costs apply if they are in the same region. These costs[3] typically amount to $0.09/GB, $0.02/GB, and $0.01/GB, respectively, in the most common regions. Additionally, if the exporter is accessed through a VPC, a fixed fee of $0.01/hr (approximately $7/month) is charged alongside the $0.01/GB. Assuming no compression, and with roughly 1 GB per 1M metric updates, these per-GB figures translate directly into costs per 1M metric updates.
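To get a feel for how the two Firehose charges behave at a given volume, here is a minimal sketch using the prices quoted above. The names are hypothetical, the 1 KB update size is the estimate from this post, and treating the VPC option as an hourly fee plus an additional $0.01/GB is my reading of the pricing rather than something verified here.

```python
HOURS_PER_MONTH = 30 * 24   # 30-day month
UPDATE_SIZE_GB = 1e-6       # ~1 KB per metric update (estimate)
INGESTION_PER_GB = 0.029    # Firehose ingestion, Oregon
TRANSFER_PER_GB = {
    "internet": 0.09,       # exporter outside AWS
    "inter_region": 0.02,   # exporter in another AWS region
    "intra_region": 0.01,   # exporter in the same region
}
VPC_PER_HOUR = 0.01         # assumed fixed fee for VPC delivery
VPC_PER_GB = 0.01           # assumed per-GB fee for VPC delivery


def firehose_monthly_cost(updates: int, location: str, via_vpc: bool = False) -> float:
    """Estimated monthly Firehose charges (ingestion + outbound transfer)."""
    gigabytes = updates * UPDATE_SIZE_GB
    cost = gigabytes * (INGESTION_PER_GB + TRANSFER_PER_GB[location])
    if via_vpc:
        cost += HOURS_PER_MONTH * VPC_PER_HOUR + gigabytes * VPC_PER_GB
    return cost


for location in TRANSFER_PER_GB:
    print(location, round(firehose_monthly_cost(1_000_000, location), 3))
```

At low volumes the fixed VPC fee dominates these charges, so it is worth checking before routing delivery through a VPC.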

Adding up all the different items ($3.00 for Metric Streams, $0.029 for Firehose ingestion and $0.01 to $0.09 for data transfer), we get that the overall cost per 1M metric updates will be between $3.039 and $3.119. As noted, the actual cost will change based on other factors but, as the bulk of the cost comes from the metric streams, the variation will not be substantial. From here, the cost per metric is simple arithmetic: a 1-minute frequency metric has 43,200 updates per month while a 5-minute one has only 8,640, which at about $3.05 per 1M updates yields a cost of ~$0.13176 and ~$0.02635 per month respectively.
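The arithmetic above can be reproduced with a short sketch. The constant and function names are hypothetical; the prices are the ones quoted in this post, with roughly 1 GB of data per 1M updates and a 30-day month assumed.

```python
STREAM_PER_MILLION = 3.00      # $0.003 per 1,000 metric updates
INGESTION_PER_MILLION = 0.029  # Firehose ingestion at ~1 GB per 1M updates
TRANSFER_PER_MILLION = {       # outbound transfer at ~1 GB per 1M updates
    "intra_region": 0.01,
    "inter_region": 0.02,
    "internet": 0.09,
}
MINUTES_PER_MONTH = 30 * 24 * 60


def cost_per_million(location: str) -> float:
    """All-in streaming cost per 1M metric updates for a deployment location."""
    return STREAM_PER_MILLION + INGESTION_PER_MILLION + TRANSFER_PER_MILLION[location]


def monthly_cost_per_metric(interval_minutes: int, location: str) -> float:
    """Monthly cost of one metric that emits an update every interval_minutes."""
    updates = MINUTES_PER_MONTH / interval_minutes
    return updates * cost_per_million(location) / 1_000_000


for location in TRANSFER_PER_MILLION:
    print(
        location,
        round(cost_per_million(location), 3),
        round(monthly_cost_per_metric(1, location), 5),
        round(monthly_cost_per_metric(5, location), 5),
    )
```

Whichever location is picked, the per-metric result moves by only a fraction of a cent, which is why a single approximate figure is used for the per-metric costs.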

How Does It Compare to YACE?

Given that YACE is widely regarded as the default exporter for AWS CloudWatch metrics, it's pertinent to compare its operating costs with those of our exporter. The primary distinction between them lies in their methods of retrieving metrics from AWS: one utilizes Metric Streams, while the other leverages the CloudWatch API.

YACE works by first issuing a ListMetrics call for each of the metric names it has been configured to retrieve, and then issuing either a GetMetricData or a GetMetricStatistics call for each of the metric instances found in the list call.

It is important to note that ListMetrics returns all instances that have generated data recently, but not necessarily during the period being scraped.

This means that a metric that hasn't been updated will still be listed and scraped, yielding a NaN value.

ListMetrics is priced at $0.01 per 1,000 requests, and each call can return up to 500 metrics. Regardless of which API is used to retrieve the metric values, the cost is the same: $0.01 per 1,000 metrics requested, with up to 5 aggregations or statistics each.

For a 1-minute frequency metric, this translates to $0.43286/month: $0.432 from requesting the metric 43,200 times through GetMetricData and $0.00086 from its share of the ListMetrics calls.

With 5-minute frequency scraping, the cost is a fifth of that, or $0.08657 per metric per month.
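The per-metric figures for the API approach can be checked with a small sketch. The names are hypothetical, and it assumes every ListMetrics call returns a full page of 500 metrics, so each metric carries 1/500 of a list call per scrape, and a 30-day month.

```python
GET_PRICE_PER_METRIC = 0.01 / 1_000  # per metric requested via the Get* APIs
LIST_PRICE_PER_CALL = 0.01 / 1_000   # per ListMetrics request
METRICS_PER_LIST_CALL = 500          # assumed full pages
MINUTES_PER_MONTH = 30 * 24 * 60


def api_monthly_cost_per_metric(scrape_interval_minutes: float) -> float:
    """Monthly API cost of scraping one metric at the given interval."""
    scrapes = MINUTES_PER_MONTH / scrape_interval_minutes
    get_cost = scrapes * GET_PRICE_PER_METRIC
    list_cost = scrapes * LIST_PRICE_PER_CALL / METRICS_PER_LIST_CALL
    return get_cost + list_cost


print(round(api_monthly_cost_per_metric(1), 5))
print(round(api_monthly_cost_per_metric(5), 5))
```

If list pages come back less than full, the ListMetrics share grows, but it stays a small part of the total.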

In either case, the operating costs of retrieving the metrics are significantly lower with the streaming architecture than with the API mechanism: roughly $0.13 versus $0.43 per month for a 1-minute metric, or about 70% less. Furthermore, YACE scrapes all metrics at the same interval regardless of how frequently they are updated, potentially making 5-minute metrics as expensive to scrape as 1-minute ones.

Where YACE has the upper hand, though, is that it allows reducing the update frequency of the metrics. That is, it can be configured to scrape 1-minute update frequency metrics every 10 minutes, thus reducing its scraping cost; this is not possible when using CloudWatch Metric Streams.
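This trade-off can be made concrete by estimating the scrape interval at which polling costs the same as streaming. The sketch below is only an approximation: the names are hypothetical, the streaming cost is taken as about $3.05 per 1M updates, and each scrape is assumed to cost one metric request plus 1/500 of a ListMetrics call.

```python
STREAM_COST_PER_UPDATE = 3.05 / 1_000_000         # approximate all-in streaming cost
API_COST_PER_SCRAPE = 0.01 / 1_000 * (1 + 1 / 500)  # Get* request + ListMetrics share


def break_even_scrape_interval(update_interval_minutes: float) -> float:
    """Scrape interval (minutes) at which polling costs as much as streaming.

    Streaming cost per month: (M / update_interval) * STREAM_COST_PER_UPDATE
    Polling cost per month:   (M / scrape_interval) * API_COST_PER_SCRAPE
    Setting them equal makes the minutes-per-month term M cancel out.
    """
    return update_interval_minutes * API_COST_PER_SCRAPE / STREAM_COST_PER_UPDATE


print(round(break_even_scrape_interval(1), 2))  # 1-minute metrics
print(round(break_even_scrape_interval(5), 2))  # 5-minute metrics
```

With these figures, polling a 1-minute metric becomes cheaper than streaming it once the scrape interval grows past a little over 3 minutes, so a 10-minute scrape would cost less, at the price of lower resolution.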

Wrap Up

By using the streaming architecture, we are able to reduce the cost of getting the metrics out of AWS for translation into a Prometheus-compatible format, while also reducing the ingestion delay.

Additionally, through this analysis, we provide an estimation of the anticipated operational costs associated with running the exporter. This empowers you to make informed decisions regarding the feasibility of integrating the exporter into your budget, mitigating the risk of unexpected expenses down the line.

Got any thoughts, questions, or additional insights? I'd love to hear from you!

Sources

[1] Amazon CloudWatch Pricing

[2] Amazon Data Firehose Pricing

[3] Amazon EC2 On-Demand Pricing

[4] DynamoDB Metrics and Dimensions
